Evolutive Rendering Models

Fangneng Zhan*

Hanxue Liang*

Yifan Wang

Michael Niemeyer

Michael Oechsle

Adam Kortylewski

Cengiz Oztireli

Gordon Wetzstein

Christian Theobalt

Abstract

The landscape of computer graphics has undergone significant transformations with recent advances in differentiable rendering models. These rendering models often rely on heuristic designs that may not fully align with the final rendering objectives. We address this gap by pioneering evolutive rendering models, a methodology in which rendering models can evolve and adapt dynamically throughout the rendering process. In particular, we present a comprehensive learning framework that enables the optimization of three principal rendering elements: gauge transformations, ray sampling mechanisms, and primitive organization. Central to this framework is the development of differentiable versions of these rendering elements, which allows gradients to be backpropagated effectively from the final rendering objectives. A detailed analysis of gradient characteristics is performed to facilitate a stable and goal-oriented evolution of these elements. Our extensive experiments demonstrate the large potential of evolutive rendering models for enhancing rendering performance across various domains, including static and dynamic scene representations, generative modeling, and texture mapping.

Methods

1. Evolutive Gauge Transformation

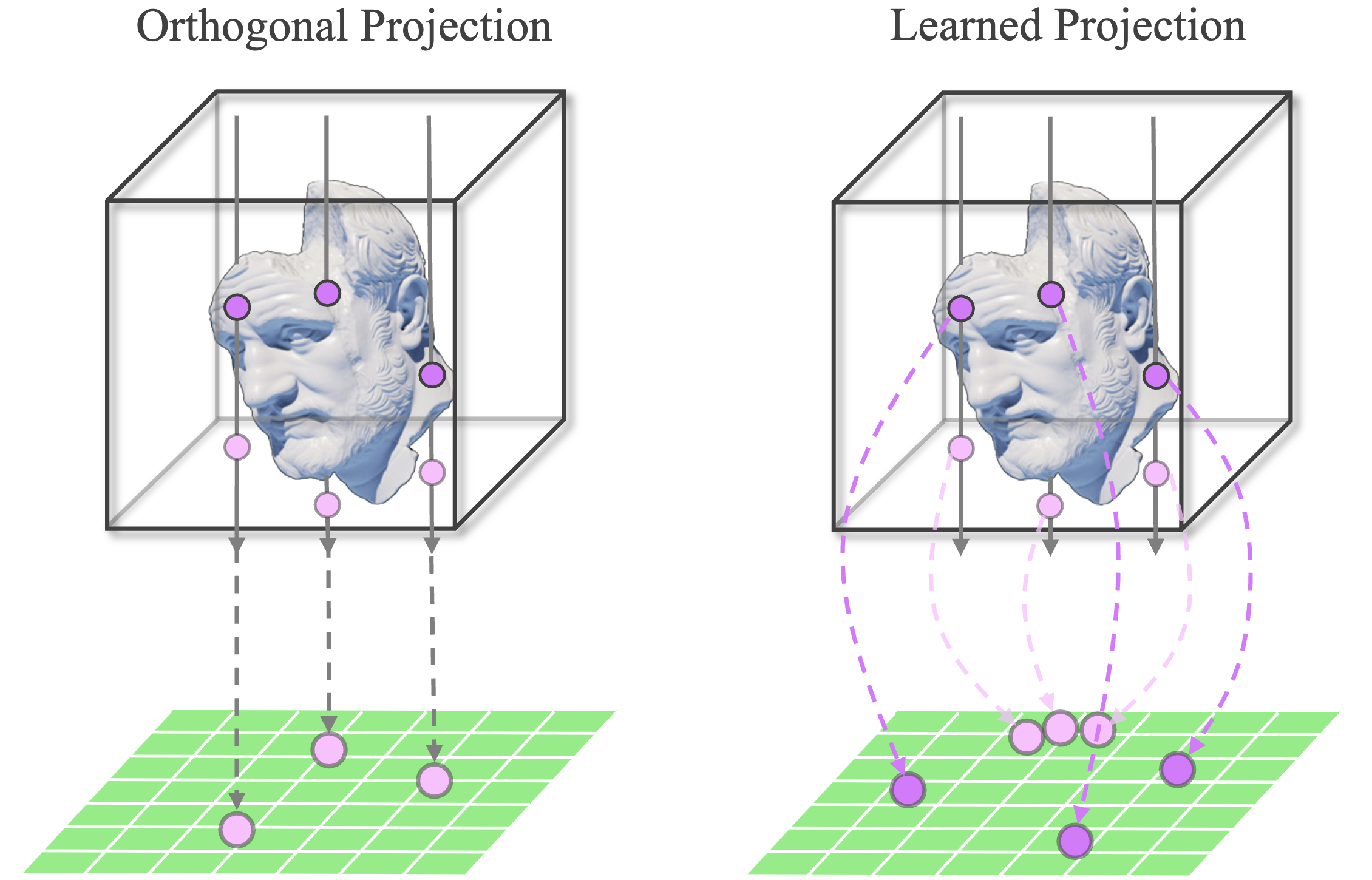

Generally, a gauge defines a measuring system, e.g., a pressure gauge or a temperature gauge. In the context of neural rendering, a measuring system (i.e., a gauge) is a specification of the parameters used to index a radiance field, e.g., 3D Cartesian coordinates in the original NeRF or a triplane in EG3D. The transformation between different measuring systems is referred to as a **Gauge Transformation**. For radiance fields, a gauge transformation maps points from the original space into another gauge system, which is then used to index the field. This additional transformation can bring benefits to rendering, e.g., lower memory cost, higher rendering quality, or an explicit texture, depending on the purpose of the model. Typically, the gauge transformation is performed via a pre-defined function, e.g., an orthogonal mapping in 3D. Such a pre-defined function is a generic design shared across scenes, so it is not necessarily the best choice for a specific target scene. Moreover, manually designing an optimal gauge transformation that aligns best with the complex training objective is non-trivial. We thus introduce the concept of Evolutive Gauge Transformation (EGT), which optimizes the desired transformation directly under the guidance of the final training objective.
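The following toy sketch illustrates the idea behind EGT; it is not the paper's implementation. A 2D "scene" whose signal varies along a diagonal is stored in a 1D feature grid that is indexed through a gauge u = tanh(w·x + b). With (w, b) frozen at an axis-aligned projection (a stand-in for a pre-defined gauge), only the grid is trained and the signal cannot be represented. When the same MSE loss also updates (w, b), the gauge can rotate toward the direction along which the scene actually varies. The target function, the grid size `G`, the tanh squashing, the helper names `interp_1d` and `fit`, and the learning rates are all hypothetical choices; gradients are derived by hand so the sketch needs only NumPy.

```python
import numpy as np


def interp_1d(grid, u):
    """Linearly interpolate a 1D feature grid spanning [-1, 1] at coordinates u."""
    G = grid.shape[0]
    p = (u + 1.0) * 0.5 * (G - 1)                    # continuous grid index
    i = np.clip(np.floor(p).astype(int), 0, G - 2)   # left neighbour of each query
    t = p - i                                        # offset inside the cell
    vals = (1.0 - t) * grid[i] + t * grid[i + 1]
    slope = (grid[i + 1] - grid[i]) * 0.5 * (G - 1)  # dF/du inside the cell
    return vals, slope, i, t


def fit(x, y, learn_gauge, G=32, steps=3000, lr_grid=2.0, lr_gauge=0.1):
    """Fit a 1D grid indexed through the gauge u = tanh(x @ w + b); return the final MSE."""
    grid = np.zeros(G)
    w = np.array([1.0, 0.0])  # hypothetical pre-defined, axis-aligned gauge: u = tanh(x_0)
    b = 0.0
    n = len(y)
    for _ in range(steps):
        u = np.tanh(x @ w + b)
        pred, slope, i, t = interp_1d(grid, u)
        g = 2.0 * (pred - y) / n  # dL/dpred for L = mean((pred - y)**2)
        # Scatter the gradient onto the two grid nodes each sample touches.
        grad_grid = (np.bincount(i, weights=g * (1.0 - t), minlength=G)
                     + np.bincount(i + 1, weights=g * t, minlength=G))
        if learn_gauge:
            # Chain rule through the interpolation and tanh into the gauge (w, b).
            du = g * slope * (1.0 - u ** 2)
            w = w - lr_gauge * (x.T @ du)
            b = b - lr_gauge * du.sum()
        grid = grid - lr_grid * grad_grid
    pred, _, _, _ = interp_1d(grid, np.tanh(x @ w + b))
    return float(np.mean((pred - y) ** 2))


rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=(2000, 2))
y = np.sin(3.0 * (x[:, 0] + x[:, 1]) / np.sqrt(2.0))  # toy scene varying along a diagonal

fixed_mse = fit(x, y, learn_gauge=False)   # gauge frozen: only the grid is trained
evolved_mse = fit(x, y, learn_gauge=True)  # gauge evolves under the same loss
```

Both runs start from the same gauge, and in each run the grid is updated in exactly the same way. The only difference is whether the loss gradient is also allowed to reach (w, b), which mirrors the contrast between a fixed and an evolutive gauge transformation.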

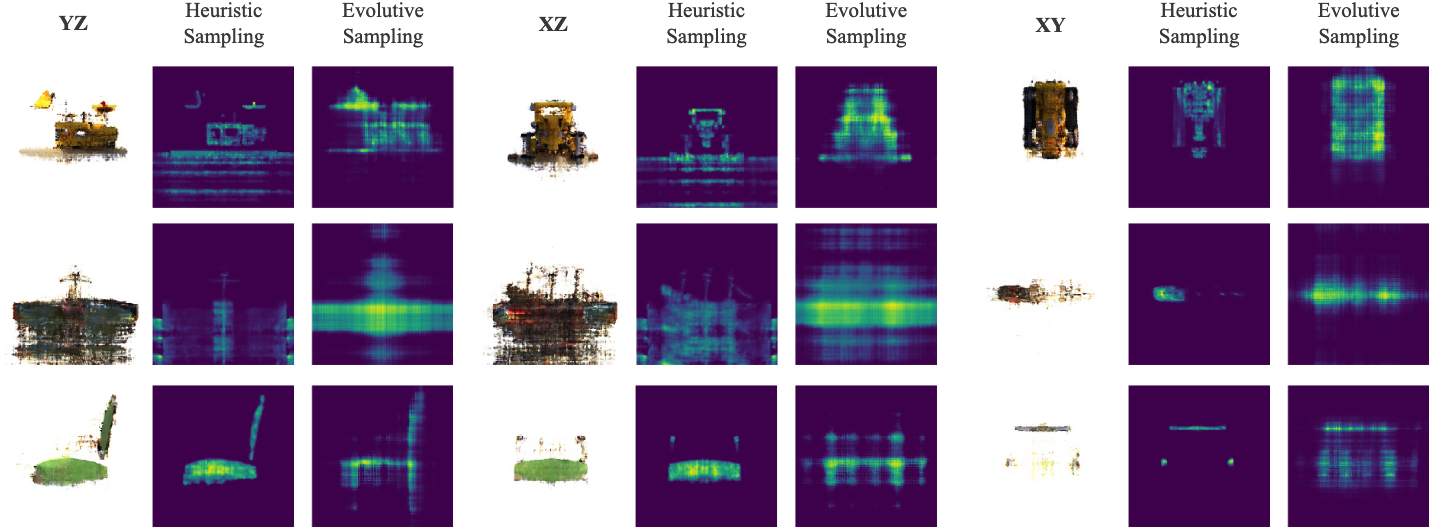

2. Evolutive Ray Sampling

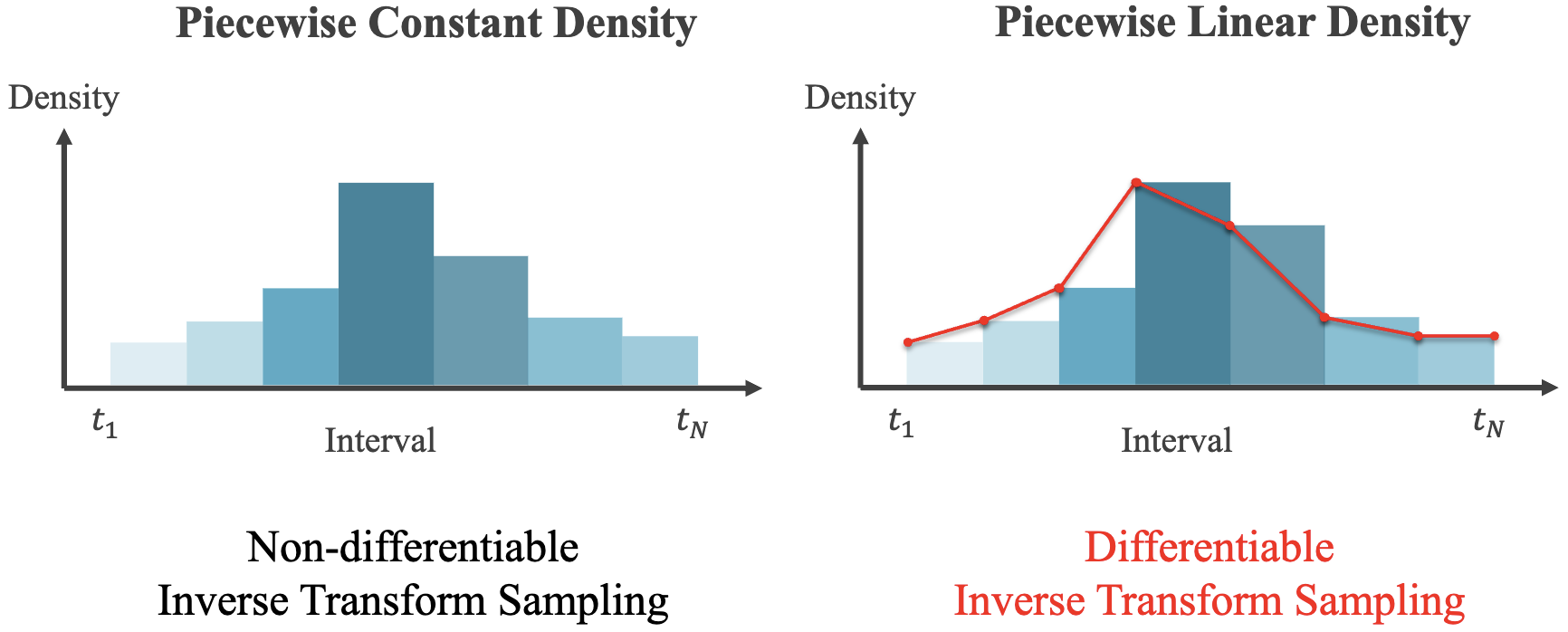

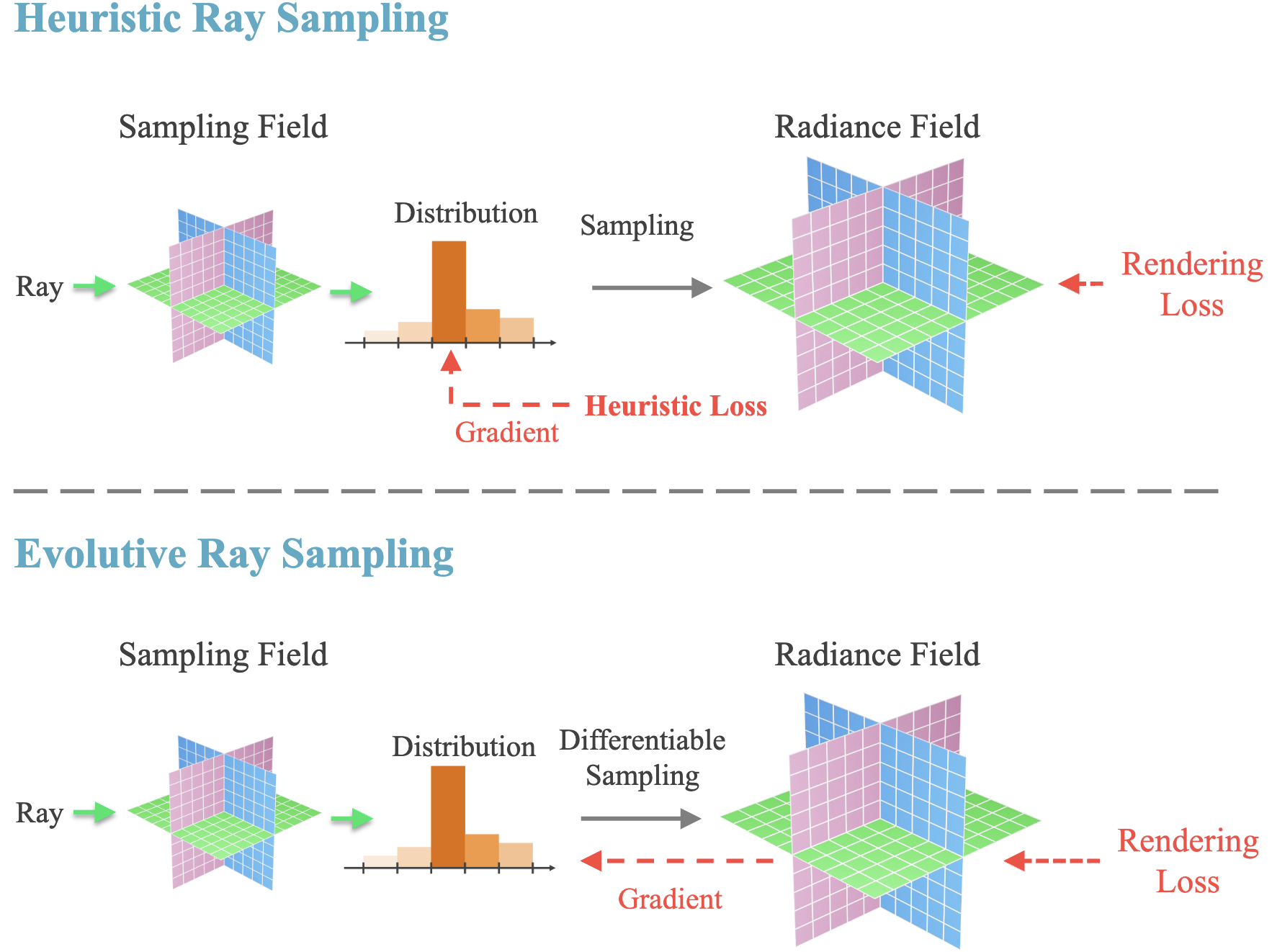

In the volume rendering process, densely evaluating the radiance field network at query points along each camera ray is inefficient, since only a few regions contribute to the rendered image. A coarse-to-fine sampling strategy is therefore usually employed to increase rendering efficiency by allocating samples in proportion to their expected effect on the final rendering. To optimize the sampling field, previous works either treat it as a radiance field trained with a photometric loss or distill density knowledge from the radiance field. Both approaches thus rest on the same heuristic assumption: the optimal sampling field should be aligned with the density field. However, the objective of the sampling field is to select the best set of points at which to evaluate the radiance field, whereas the density field aims to yield the best rendering result. This heuristic assumption therefore biases the optimization objective of the sampling field. On the other hand, manually designing a training objective for the sampling field is non-trivial; because the sampling field serves the radiance field, its objective should be determined by the radiance field. To this end, we propose to backpropagate gradients from the radiance field (i.e., the rendering loss) to optimize the sampling field directly, eliminating the need for heuristically designed auxiliary loss supervision.
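The sketch below shows what optimizing a sampling field purely through the rendering loss can look like on a single toy ray. The density and colour profiles, the bin and sample counts, and all helper names are hypothetical. The sampling field is a piecewise-constant PDF over depth bins, and samples are drawn by inverse-CDF sampling at fixed quantiles. Under this construction, each sample depth is a continuous function of the field's logits. This differentiable inverse-CDF construction is an assumption made for illustration and is not necessarily the mechanism used in the paper. Central finite differences stand in for an autodiff framework. No density-alignment loss appears anywhere: the only objective is the pixel error against a densely sampled reference rendering.

```python
import numpy as np

T_NEAR, T_FAR = 0.0, 1.0
N_SAMPLES, N_BINS = 8, 20


def density(t):
    """Hypothetical toy scene: a thin, nearly opaque surface centred at depth 0.62."""
    return 200.0 * np.exp(-0.5 * ((t - 0.62) / 0.02) ** 2)


def color(t):
    """Hypothetical scalar radiance along the ray."""
    return 0.5 + 0.5 * np.sin(10.0 * t)


def render(ts):
    """NeRF-style quadrature of the volume rendering integral over sorted depths ts."""
    deltas = np.diff(np.append(ts, T_FAR))
    alpha = 1.0 - np.exp(-density(ts) * deltas)
    trans = np.cumprod(np.append(1.0, 1.0 - alpha[:-1]))  # transmittance T_k
    return float(np.sum(trans * alpha * color(ts)))


def sample_depths(logits):
    """Inverse-CDF sampling from a piecewise-constant sampling field.

    With fixed quantiles u_k, each depth is a continuous, piecewise-smooth
    function of the logits, so a rendering loss can be differentiated w.r.t. them.
    """
    pdf = np.exp(logits - logits.max())
    pdf /= pdf.sum()
    cdf = np.concatenate([[0.0], np.cumsum(pdf)])
    edges = np.linspace(T_NEAR, T_FAR, N_BINS + 1)
    u = (np.arange(N_SAMPLES) + 0.5) / N_SAMPLES
    return np.interp(u, cdf, edges)


C_REF = render((np.arange(4096) + 0.5) / 4096)  # dense render stands in for the GT pixel


def loss_fn(logits):
    """Rendering loss only: no auxiliary supervision on the sampling field."""
    return (render(sample_depths(logits)) - C_REF) ** 2


def num_grad(f, x, eps=1e-5):
    """Central finite differences (an autodiff framework would normally do this)."""
    g = np.zeros_like(x)
    for j in range(x.size):
        e = np.zeros_like(x)
        e[j] = eps
        g[j] = (f(x + e) - f(x - e)) / (2.0 * eps)
    return g


logits = np.zeros(N_BINS)  # uniform sampling field at initialisation
init_loss = loss_fn(logits)
for _ in range(100):
    cur, grad = loss_fn(logits), num_grad(loss_fn, logits)
    step = 10.0
    while step > 1e-8:  # backtracking: accept a step only if the loss drops
        cand = logits - step * grad
        if loss_fn(cand) < cur:
            logits = cand
            break
        step *= 0.5
final_loss = loss_fn(logits)
```

Printing `sample_depths(logits)` before and after the loop shows where the rendering loss has moved the samples. In a real pipeline, the radiance field would be trained jointly and the reference would be the ground-truth pixel.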

3. Evolutive Primitive Organization

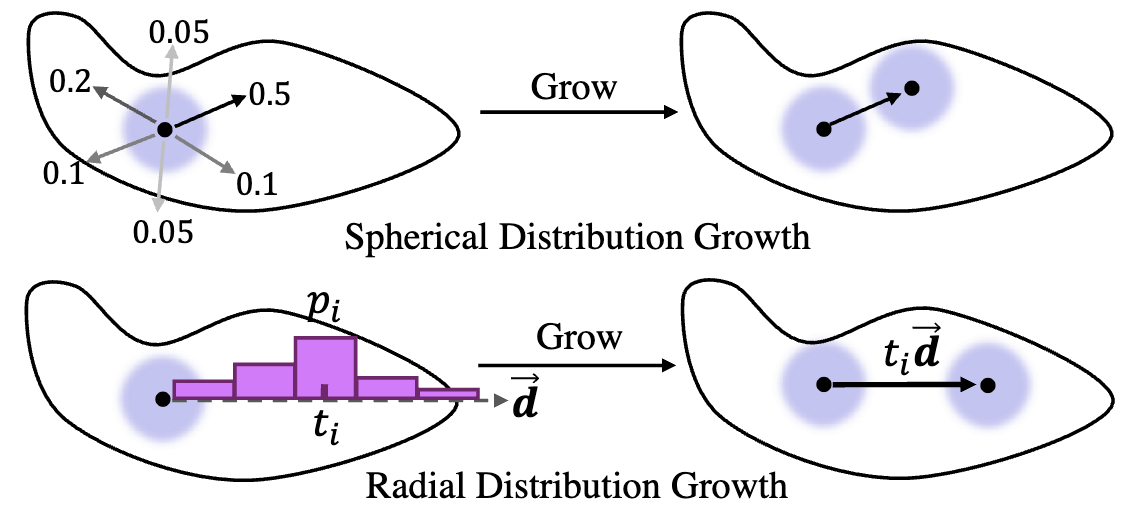

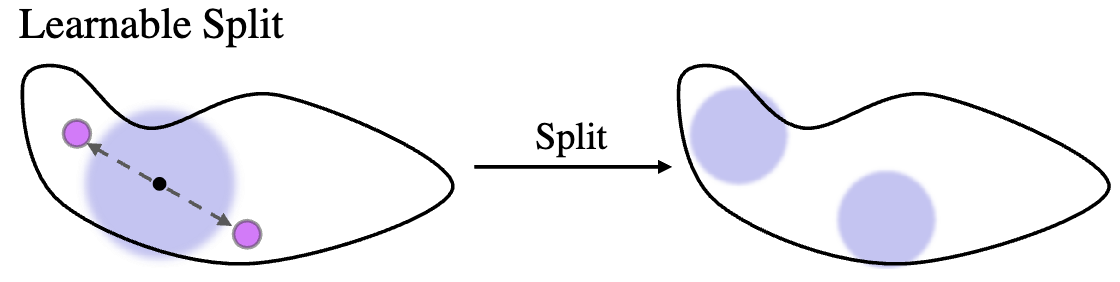

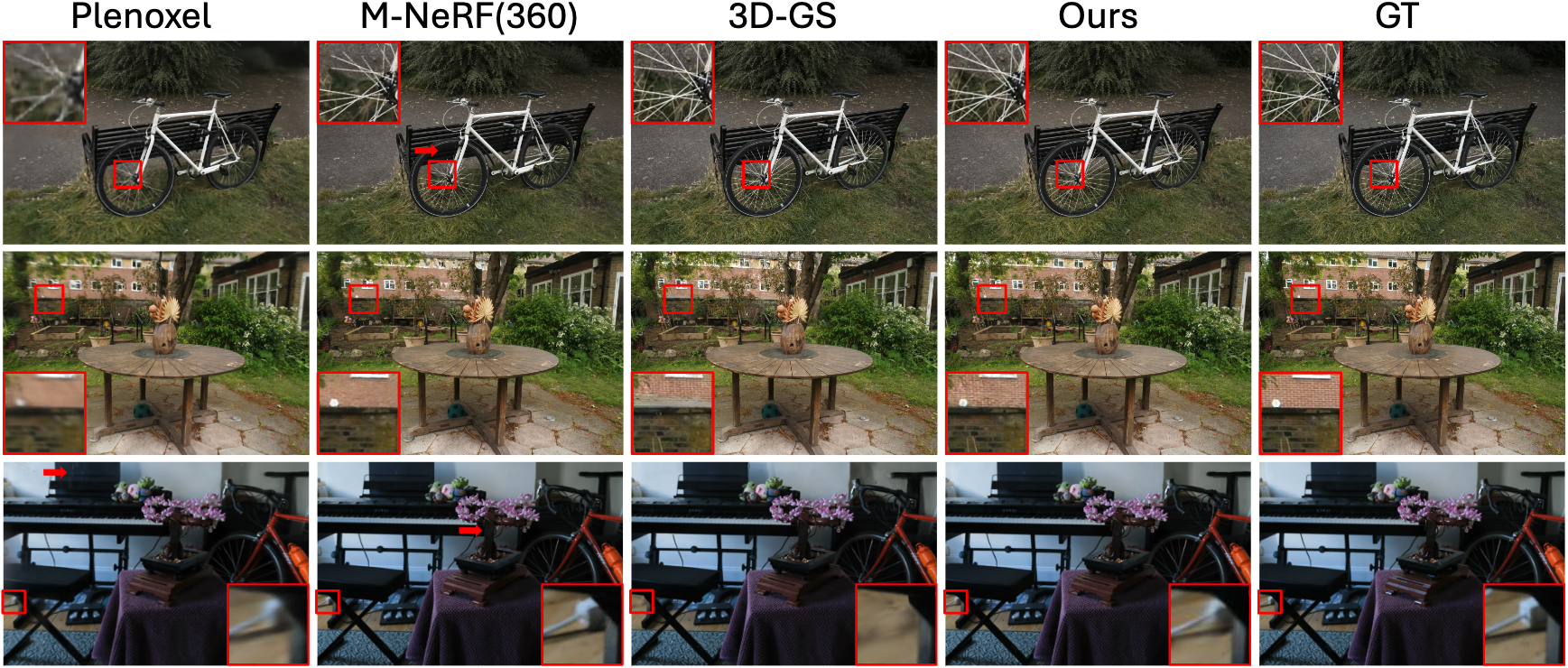

Point-based representations employ a set of geometric primitives (e.g., neural points in Point-NeRF or Gaussian splats in 3D-GS \cite{kerbl20233d}) for scene rendering. These primitives carry attributes that encode the geometry and the radiance field, and they can be rendered and optimized via volume rendering or rasterization. They are usually initialized from Structure-from-Motion (SfM) points, and optimizing their attributes directly via gradient descent is prone to local minima. Previous techniques alleviate this issue in per-scene fitting by employing pre-defined optimization heuristics, such as point growing and pruning in Point-NeRF and Adaptive Density Control in 3D-GS. However, these heuristic operations can be sub-optimal because they are non-differentiable and may be misaligned with the final training objective. Their non-differentiable nature also impedes their applicability in cross-scene generalizable settings. We thus propose primitive organization evolution, in which scene primitives are implicitly grown and split during training while gradients flow directly from the training objective. Our approach not only overcomes the challenges posed by non-differentiability but also makes it possible to extend current techniques to feed-forward generalizable settings.
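As an illustration, here is a 1D sketch of one possible way to make primitive growing and splitting differentiable. The construction is assumed for this example and is not taken from the paper. Each coarse parent Gaussian, playing the role of a sparse SfM initialisation, is reparameterized as C children with learnable offsets, log-scale deltas, and amplitudes. The children start almost on top of their parent and share its mass. From that point on, drifting apart (growing), shrinking (splitting), and amplitude decay (soft pruning) are all driven by gradients of the rendering loss, with no thresholds involved. The target signal, the parent and child counts, the helper names, and the backtracking gradient descent are all hypothetical choices.

```python
import numpy as np


def render(params, x):
    """Sum of 1D Gaussians in which every parent primitive is split into C children."""
    m = (params["mu"][:, None] + params["offset"]).ravel()            # child centres
    s = np.exp(params["log_sigma"][:, None] + params["dlog"]).ravel()  # child widths
    a = params["amp"].ravel()                                          # child amplitudes
    diff = x[:, None] - m[None, :]
    g = np.exp(-0.5 * (diff / s) ** 2)
    return g @ a, (g, diff, s, a)


def loss_and_grads(params, x, y):
    """MSE rendering loss and hand-derived gradients for parent and child parameters."""
    f, (g, diff, s, a) = render(params, x)
    r = f - y
    loss = float(np.mean(r ** 2))
    df = 2.0 * r / len(y)                        # dL/df
    shape = params["amp"].shape                  # (M parents, C children)
    d_amp = (g.T @ df).reshape(shape)
    d_m = (a * ((g * diff).T @ df) / s ** 2).reshape(shape)
    d_logs = (a * ((g * diff ** 2).T @ df) / s ** 2).reshape(shape)
    return loss, {
        "mu": d_m.sum(axis=1),          # moving a parent moves all of its children
        "offset": d_m,                  # children drifting apart: implicit growing
        "log_sigma": d_logs.sum(axis=1),
        "dlog": d_logs,                 # children shrinking: implicit splitting
        "amp": d_amp,                   # amplitudes can decay towards 0: soft pruning
    }


def make_params():
    """Hypothetical sparse initialisation: 3 parents, each split into 2 children."""
    M, C = 3, 2
    return {
        "mu": np.array([0.25, 0.5, 0.75]),
        "log_sigma": np.full(M, np.log(0.08)),
        "offset": np.tile([-0.01, 0.01], (M, 1)),  # small asymmetry breaks the tie
        "dlog": np.zeros((M, C)),
        "amp": np.full((M, C), 0.5),               # children share the parent's mass
    }


def train(params, x, y, steps=300, lr=0.05):
    """Gradient descent with backtracking, so the loss never increases."""
    loss, grads = loss_and_grads(params, x, y)
    for _ in range(steps):
        step = lr
        while step > 1e-8:
            cand = {k: v - step * grads[k] for k, v in params.items()}
            cand_loss, cand_grads = loss_and_grads(cand, x, y)
            if cand_loss < loss:
                params, loss, grads = cand, cand_loss, cand_grads
                break
            step *= 0.5
    return params, loss


x = np.linspace(0.0, 1.0, 400)
y = sum(np.exp(-0.5 * ((x - c) / 0.03) ** 2) for c in (0.2, 0.3, 0.55, 0.65, 0.8))

params = make_params()
init_loss, grads0 = loss_and_grads(params, x, y)

# Sanity check: compare one analytic gradient with a central finite difference.
eps = 1e-6
hi, lo = make_params(), make_params()
hi["offset"][0, 1] += eps
lo["offset"][0, 1] -= eps
fd = (loss_and_grads(hi, x, y)[0] - loss_and_grads(lo, x, y)[0]) / (2.0 * eps)
grad_err = abs(fd - grads0["offset"][0, 1])

trained, final_loss = train(params, x, y)
```

Because the split is a reparameterization rather than a discrete insertion, the same gradient path would remain intact if the child parameters were predicted by a network. This is what makes a feed-forward, generalizable variant conceivable in this toy setting.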

Experiments

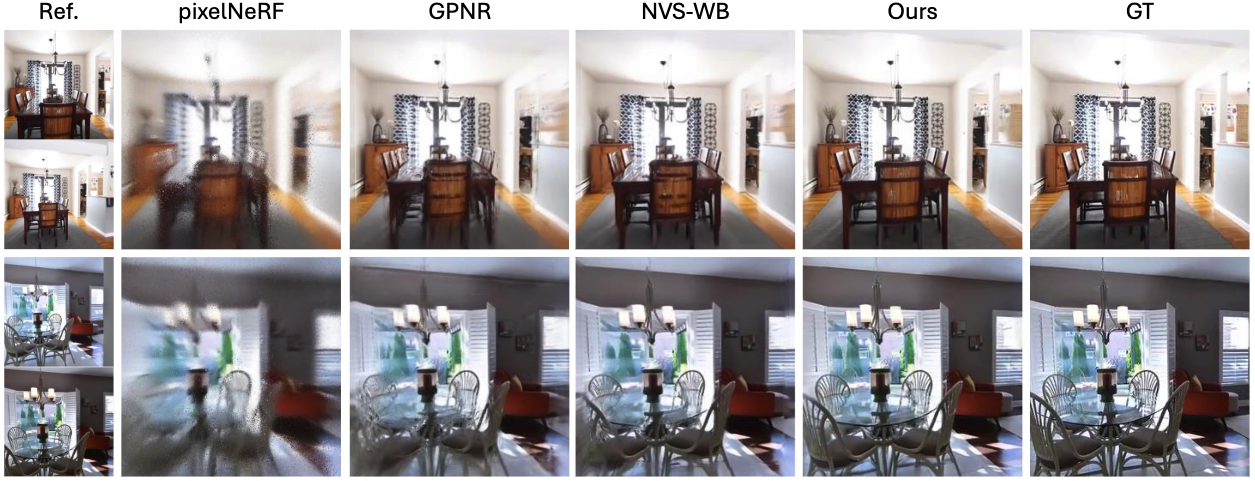

Comparison of Evolutive Gauge Transformation

Visualization of Evolutive Sampling Fields

Rendering with Evolutive Primitive Organization

Comparison of Primitive Organization

Applications

UV Mapping and Editing

Generalizable Gaussian Splatting